Why I disagree with Yann LeCun on whether LLMs could scale to AGI

Can today's large language models scale to AGI? Meta's Chief AI scientist, Yann LeCun, says no. I think his arguments are wrong.

LeCun's case boils down to four claims (full summary here):

- Machine learning is sample inefficient

- LLMs are trained on text, not videos, so don't have enough data

- Predicting the next token means there is an exponentially small chance for models to be right

- LLMs don't have system 2 ('deep') thinking

Let's go through each of these...

Machine learning is sample inefficient

LeCun argues models need many more examples to learn things compared to humans. However:

- In-context learning (a fancy name for 'prompting a trained AI with some examples') shows models can learn new tasks from a handful of examples.

- I think this is a fair comparison as human learning isn't starting from scratch either. We have evolutionary priors baked in over millions of years of evolution (that might be more akin to LLM pre-training, which is more sample inefficient).

- Techniques to overcome sample inefficiency are rapidly evolving, particularly through synthetic data generation leveraging this in-context learning. Teacher models like Meta's own Llama 4 Behemoth can create many more training samples to mitigate these bottlenecks.

- The sheer volume of data available for pre-training dwarfs what any human could process in a lifetime. 500 hours of content is uploaded to YouTube every minute - far beyond what any human could ever watch. Even with inefficient learning algorithms, models can compensate through scale.

This doesn't seem like a fundamental barrier to scaling - just an engineering challenge already being actively addressed.

LLMs are trained on text, not videos, so don't have enough data

LeCun claims text-based training means AI models will process less data than a human does when learning, because video data (like our eyes see) contains so much more data. (E.g. 1 minute of video is about 10MB, whereas the complete works of Shakespeare are ~5MB)

However, all frontier LLMs are being trained on videos now! This includes Meta's own Llama 4 models, which LeCun would have been aware of when making this point.

Even if that wasn't happening, this point conflates data size with information content. Text is extraordinarily information-dense. For example, I think more would be conveyed in the complete works of Shakespeare as text, compared to half a TikTok video (which are about the same data size).

Aren't large language models text-only by definition?

Random nerdy fact: A "language" in computer science theory is sometimes taken to mean anything that can be modelled as a sequence of symbols. So arguably they are processing a language - that of tokens (which can come from text, video, audio etc.). (I couldn't find whether the etymology of 'language model' comes from this though).

Tokens can represent text, images, audio, or any data.What most people call LLMs today (OpenAI's ChatGPT, Anthropic's Claude, Google Deepmind's Gemini, and Meta's Llama models) all are multimodal. Meta calls their own Llama 4 multimodal model a large language model:

Prescribing a 'language model' is text-only becomes an argument over semantics. And if this is the route someone would want to go down, then my claim would be 'current frontier models like the ones listed above do not have this limitaton, whatever you want to call them'.

Predicting the next token means there is an exponentially small chance for models to be right

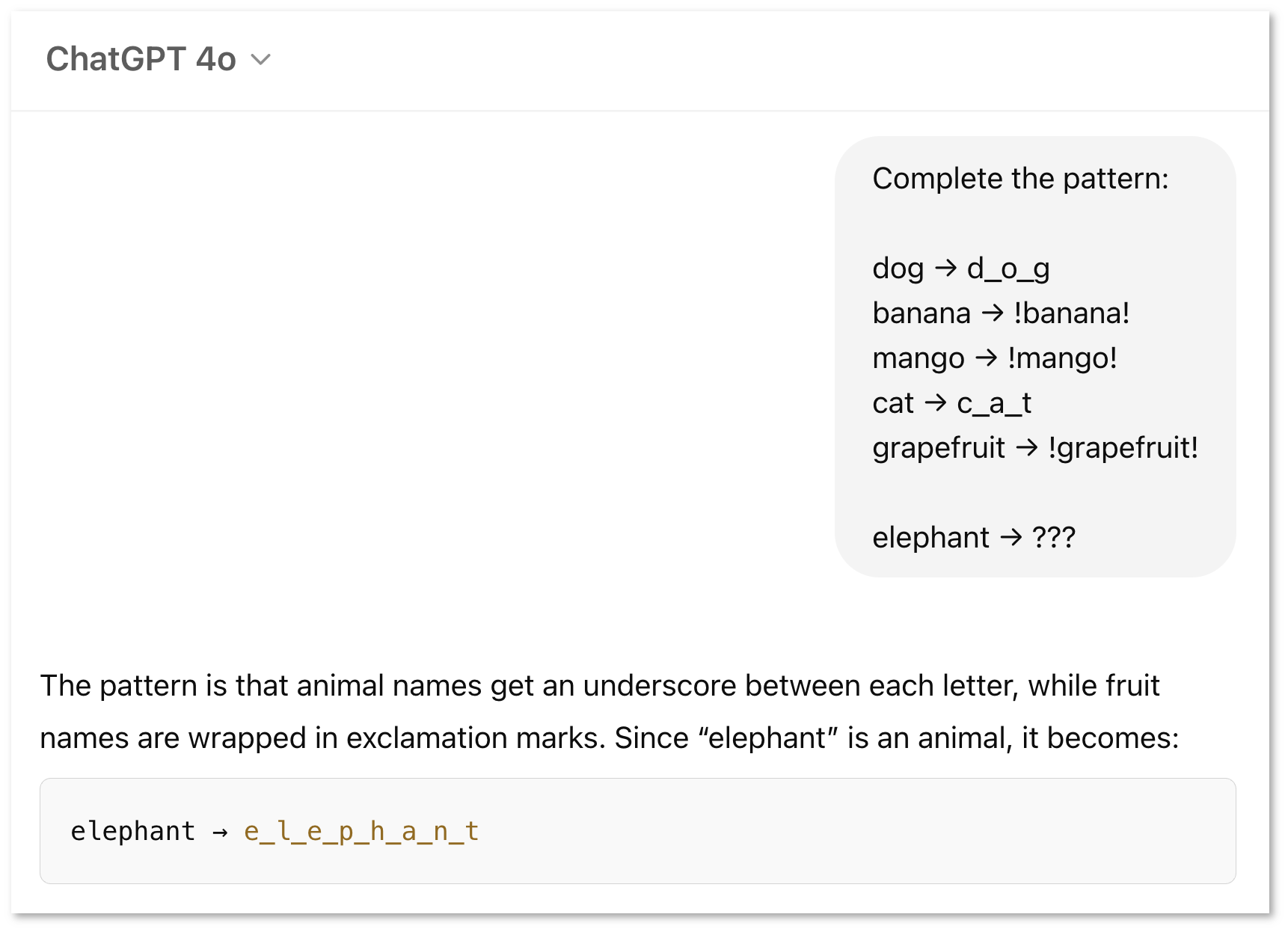

When training large language models, we use a loss function to tell our models how correct they are. In pre-training, being correct = predicting internet text exactly word-for-word. For example, if the text online is 'The cat sat on the mat', the model has to predict this exactly to get the best score on the loss function.

LeCun claims models will always be wrong, because there's so many chances for them to slip up.

However, reproducing existing text isn't our goal for AGI. Instead, all of the following would be ways to get to the same overall answer:

- A cat sat on the mat

- A cat was sitting on the mat

- On the mat, sat a cat

- A dog - oh no wait sorry I mean a cat - sat on the mat

This means there's many routes to the final destination, not one. And with self-correction, models can often get themselves back on track.

LLMs don't have system 2 ('deep') thinking

There is genuine uncertainty about whether this (or something like it) could cause AI progress to plateau. But it doesn't seem to imply that LLMs are 'doomed' and can't scale to AGI.

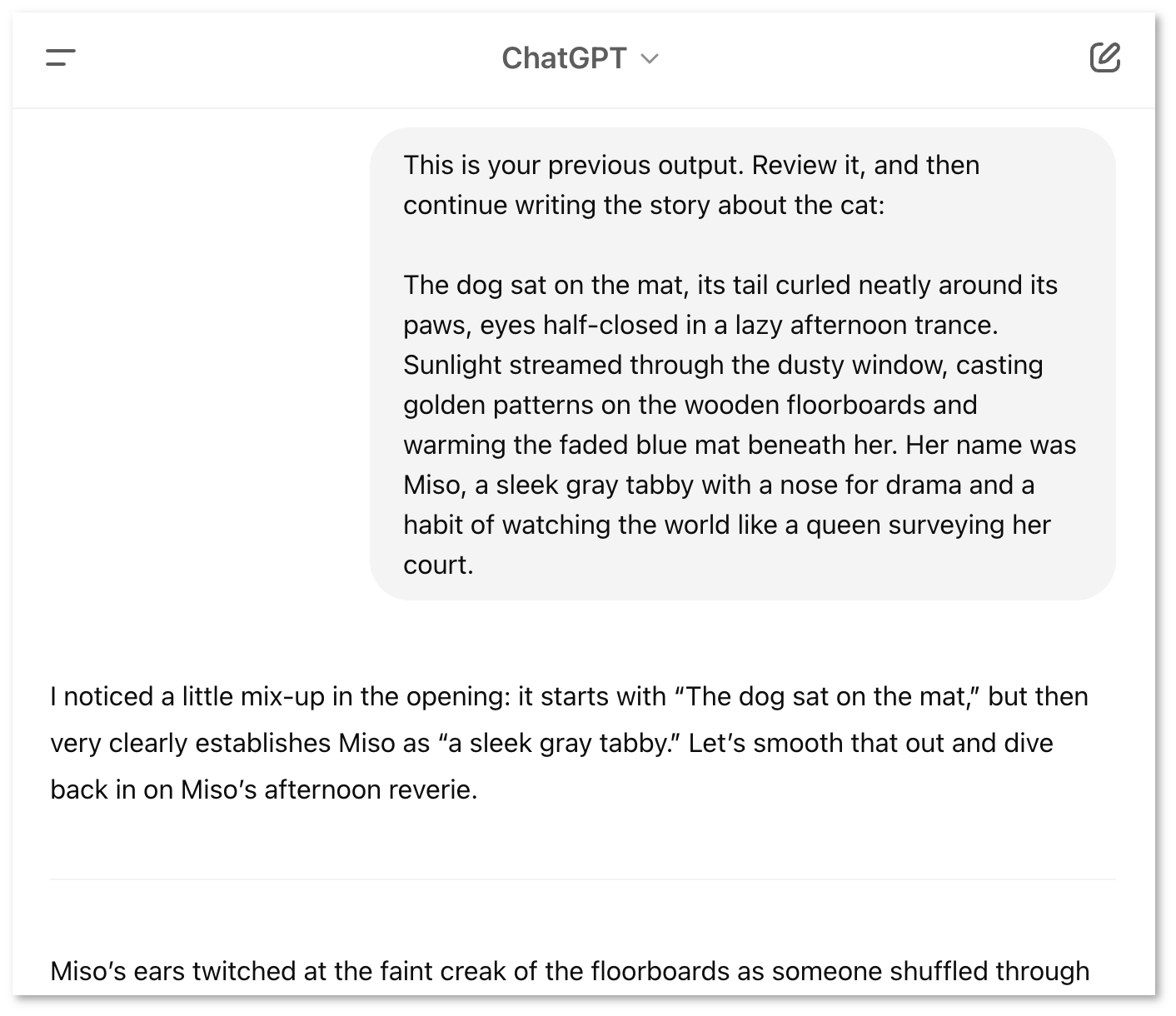

However, scaling alone has exceeded most previous bearish predictions about the kinds of reasoning LLMs can do. And it's not clear system 2 thinking is a 'real' barrier, any more than previous milestones thought to be barriers.

Furthermore, some AI techniques already allow models to 'think harder' on particular things. For example, chain-of-thought reasoning seems to demonstrate system 2-like capabilities.

What I believe on LLMs scaling to AGI

I believe the LLM architecture could scale to AGI with enough compute and data along the current trajectory (e.g. multimodal LLMs, tool use, agent loops, chain-of-thought inference-time scaling, reinforcement learning from self-play environments).

However, I agree with LeCun that scaling LLMs is unlikely to be the optimal path to safe AGI. It's plausible that there are better architectures we will discover before AGI, which might speed up progress even more.

To me, this means scaling LLMs sets an upper bound on how hard AGI is to achieve - all the more reason the world should be better prepared for such an important technology.

Footnotes

-

Random nerdy fact: A "language" in computer science theory is sometimes taken to mean anything that can be modelled as a sequence of symbols. So arguably they are processing a language - that of tokens (which can come from text, video, audio etc.). (I couldn't find whether the etymology of 'language model' comes from this though). ↩